Self-efficacy (SE) refers to individuals' perception of their ability to perform specific activities based on their capacity to organize and execute action plans to attain a predetermined outcome (Bandura, 2010). SE is closely linked to skills and an individual's self-assessment of these skills within a particular domain, facilitated through intricate processes of self-persuasion (Bandura, 1993). This cognitive process draws from four primary sources: personal performance experiences, experiences derived from social models, social persuasion, and motivational and physiological states (Bandura, 2001; Maddux & Meier, 1995).

In this context, SE emerges as a vital theoretical construct influencing the development of professional competencies (Li et al., 2021). Consequently, the evaluation of this construct holds substantial significance. The perception of SE profoundly affects motivation strategies, encompassing the pursuit of specific objectives and emotional responses to challenging or uncomfortable situations (Tang et al., 2021; Wen et al., 2021).

Notably, in the realm of psychology, SE is integrated into the curricular guidelines for training psychologists, as stipulated by the National Curriculum Guidelines in Brazil, which emphasize enhancing principles fostering competence and commitment across various facets of professional development (Brazil, 2019). To succinctly encapsulate these principles, they revolve around healthcare, decision-making, communication, leadership, administration, management, and continuous education (Vieira-Santos, 2016).

Training within psychology programs instills a culture of self-assessment and promotes reflective practice mediated by feedback from educators, mentors, or peers (Rodriguez et al., 2017). This pedagogical model accentuates the cultivation of diverse capacities to critically analyze and reflect on issues that may induce tension or discomfort during professional practice (Brazil, 2019). An essential facet of a psychologist's professional repertoire is relational skill, which includes social and emotional intelligence and the aptitude to establish effective interpersonal connections. Psychologists need to develop their distinct professional and personal approaches to ethically and judiciously navigate their interactions with others (Chenneville & Schwartz-Mette, 2020; Irwin et al., 2020; Larson & Bradshaw, 2017; Mollen & Ridley, 2021; Rief, 2021).

Research has shown that SE can be assessed in psychology students, as demonstrated by Kaas (2018), who observed that students with advanced training at the onset of their undergraduate studies exhibited elevated SE levels. Furthermore, certain studies have revealed that increased engagement in intervention activities positively influences SE levels, as students perceive themselves as better prepared and motivated to undertake responsibilities associated with such activities (Milsom & McCormick, 2015; Mullen & Lambie, 2016). A recent investigation has also suggested that mindfulness may indirectly relate to the SE of psychologists and counselors (Latorre et al., 2021). In this study, professional competence was self-assessed through self-compassion, and the authors contend that novel approaches are requisite to assess the association between SE and professional competence using diverse metrics.

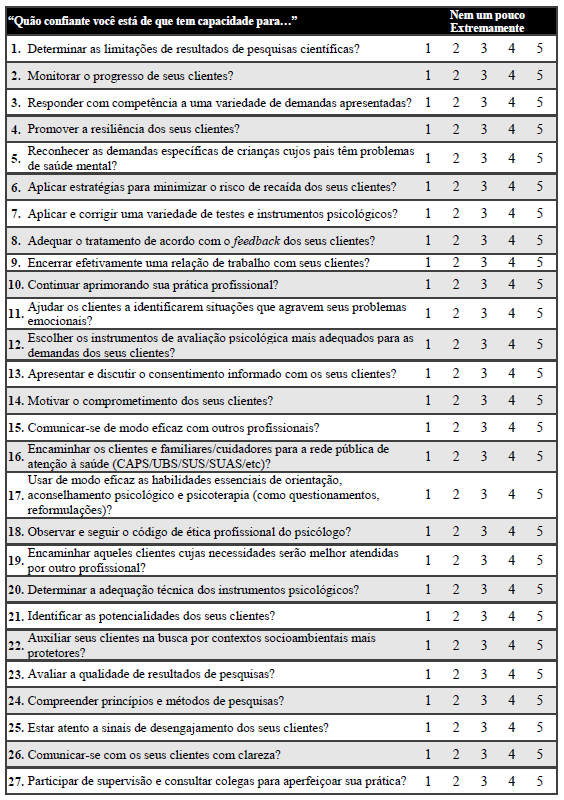

As elucidated earlier, the perception of SE can exert a substantial impact on the professional practice of psychology students and practitioners. Regrettably, specific instruments tailored for the precise evaluation of this construct remain unavailable in Brazil. However, Watt et al. (2019) developed and validated a self-report scale designated as the Psychologist and Counsellor Self-Efficacy Scale (PCES), designed to gauge SE in Australian psychologists based on essential skills and competencies characteristic of their profession. The PCES consists of 31 items graded on a Likert scale spanning from 1 (not at all) to 4 (extremely) and assesses five SE dimensions: (i) research; (ii) ethics; (iii) legal matters; (iv) assessment and measurement; and (v) intervention.

Given the evolving landscape in which psychology professionals operate, the development of self-administered instruments is imperative, aiding professionals responsible for the training of psychologists in gauging SE levels across various domains of professional practice. Furthermore, no SE scale has been specifically designed or adapted for the Brazilian context, which underscores the urgency of further investigations in this realm.

The objectives of this study were to: (i) translate, adapt, and evaluate the linguistic and semantic properties of the PCES for use among psychology students and psychologists in Brazil; and (ii) assess the psychometric attributes of the adapted PCES, including its construct validity and other related psychometric properties.

Method

Participants

The study sample comprised 2,007 individuals, with 132 students and 1,875 psychologists. The majority of participants were female residents of the Southeast region of Brazil, and nearly 60 % were married, with roughly half reporting having at least one child. Regarding education, which was assessed solely among psychologists, more than half held specialist qualifications, 20 % possessed master's degrees, and 5 % had completed doctorates. The average time since graduation was approximately 12 years.

Table 1: Sociodemographic characteristics among psychology students and psychologists

Note: N: number of participants; χ²: Chi-squared test; p: significance level; Cramer’s V: effect size calculated for nominal variables; M: mean; SD: standard deviation; η²: effect size calculated using the eta-squared test. * p < .05; *** p < .00

For participation in the study, the criteria we set required individuals to be at least 18 years old. We specifically sought fourth-year psychology students who were currently involved in clinical practice. Additionally, it was imperative for participants to have access to the internet and to be Brazilian citizens, a detail we confirmed through IP address checks.

On the other hand, we identified certain factors that would exclude potential participants. These included instances where the questionnaire submissions were incomplete or filled incorrectly. Additionally, while the survey was open, we intended to focus solely on psychology students and practicing psychologists. Therefore, responses from individuals from other professional backgrounds were excluded. Similarly, even if a response came from a Brazilian citizen, if it originated outside Brazil's geographic boundaries and this could not be cross-verified through IP addresses, we deemed it unsuitable for inclusion.

Instruments

Participants initially provided sociodemographic information, including gender, region of residence in Brazil, marital status, number of children, and professional qualifications (among psychologists). Additionally, participants rated their perceived ability to handle various clinical demands, both in-person and virtually, on a scale ranging from 0 (totally insecure) to 10 (totally confident). This perception assessment was undertaken in alignment with existing psychometric validation studies employing perception as a component of convergent validity criteria (Andrade et al., 2020), particularly when alternative instruments are limited (Kline, 2015).

Psychologist and Counsellor Self-Efficacy Scale (PCES)

The PCES, originally developed in Australia (Watt et al., 2019), aims to assess psychosocial SE through 31 items categorized into five specific dimensions: (i) research; (ii) ethics; (iii) legal matters; (iv) assessment and measurement; and (v) intervention. The PCES was previously validated exclusively among Australian professionals, demonstrating high internal consistency (α = .94).

General Perceived Self-Efficacy Scale (GPSS)

The instrument assesses SE using ten items rated on a Likert scale ranging from 1 to 4 points. The total score falls within a range of 10 to 40, with a higher score indicating a higher level of SE. Typical items include statements such as “Thanks to my resourcefulness, I know how to handle unforeseen situations” and “When confronted with a problem, I can usually find several solutions” (Schwarzer & Jerusalem, 1995). The original version of this instrument was developed in Germany by Schwarzer and Jerusalem in 1995 and has since been adapted for use in approximately 30 languages worldwide. It is widely recognized in the literature as one of the most frequently employed scales for assessing SE. Moreover, there is extensive evidence supporting its validity and reliability, drawn from research conducted with diverse populations across various countries (Scholz et al., 2002). The General Perceived Self-Efficacy Scale (GPSS) was adapted and validated for use in Brazil by Sbicigo et al. (2012). This adaptation demonstrated good internal consistency (Cronbach's α = .85).
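The scoring rule described above (ten items, each rated 1 to 4, summed to a total between 10 and 40) can be sketched as follows. This is a minimal illustration only; the function name `gpss_total` and the example responses are hypothetical and are not part of the instrument or of the study's data.

```python
def gpss_total(responses):
    """Sum ten item responses (each rated 1-4) into a total score of 10-40."""
    if len(responses) != 10:
        raise ValueError("expected exactly 10 item responses")
    if any(r not in (1, 2, 3, 4) for r in responses):
        raise ValueError("each response must be an integer from 1 to 4")
    return sum(responses)


# Hypothetical respondent, used only to illustrate the scoring rule.
example = [3, 4, 2, 3, 3, 4, 4, 2, 3, 3]
print(gpss_total(example))  # 31
```

Higher totals indicate higher general self-efficacy, as stated in the instrument description.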

Procedures

The data collection process extended over a six-month period, during which participants had access to the online questionnaire. On average, participants took about 25 minutes to complete the questionnaire. The study adhered to the Declaration of Helsinki and received approval from the Research Ethics Committee of the Pontifícia Universidade Católica de Campinas (protocol number 33780620.3.0000.5481).

Cultural adaptation

The PCES was initially translated into Brazilian Portuguese by two bilingual psychologists. These translations were subsequently compared for semantics, concepts, linguistic nuances, item context, and idiomatic expressions. The primary researchers and translators collaborated to finalize the translation of items, adhering to specific cross-cultural adaptation and validation protocols outlined by the International Test Commission (ITC, 2005).

Pilot study

A pilot study, encompassing 10 students and 10 psychologists, was conducted to assess the conceptual, linguistic, and semantic equivalence of the PCES. This step facilitated participant comprehension of all PCES items. Any difficulties encountered in item comprehension were thoroughly discussed with the researchers, yet participants did not report any significant challenges in understanding the items.

Reverse translation

The PCES was subsequently back-translated into English by two independent translators who were not involved in the initial translation phase, in accordance with specific guidelines (Wild et al., 2005). The back-translations were consolidated by the researchers and submitted to the PCES developers (Watt et al., 2019) for comparison with the original PCES version. The final Brazilian version of the PCES is presented in Appendix A.

Data collection

The PCES was hosted on the SurveyMonkey® platform, and the survey link was disseminated via various social networks and email. Participants could only complete the questionnaire once, with this restriction enforced based on IP addresses. Prior to commencing the questionnaire, all participants were required to read and consent to the Free and Informed Consent Form, accessible on the study website's homepage.

Data analysis

Sociodemographic data

Sociodemographic data were analyzed according to variable type: nominal variables were assessed using the chi-squared test (χ²) and continuous variables using one-way analysis of variance (one-way ANOVA). Effect sizes were subsequently calculated using Cramer's V for nominal variables and eta squared (η²) for continuous variables (De Almeida Lins et al., 2022; Lopes et al., 2022).
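For readers less familiar with these effect sizes, the sketch below shows how Cramer's V is derived from the chi-squared statistic and how eta squared is derived from the between-group and total sums of squares. It is a plain-Python illustration under stated assumptions: the function names and the small example data are hypothetical and do not reproduce the study's data or the software the authors used.

```python
import math


def chi_square(table):
    """Pearson chi-squared statistic for a contingency table (list of rows)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


def cramers_v(table):
    """Cramer's V = sqrt(chi2 / (N * (min(rows, cols) - 1)))."""
    n = sum(sum(row) for row in table)
    k = min(len(table), len(table[0]))
    return math.sqrt(chi_square(table) / (n * (k - 1)))


def eta_squared(groups):
    """Eta squared = between-group sum of squares / total sum of squares."""
    values = [x for group in groups for x in group]
    grand_mean = sum(values) / len(values)
    ss_total = sum((x - grand_mean) ** 2 for x in values)
    ss_between = sum(
        len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups
    )
    return ss_between / ss_total


# Hypothetical 2x2 table (e.g., group by category) and two hypothetical groups.
print(round(cramers_v([[10, 20], [20, 10]]), 3))
print(round(eta_squared([[1, 2, 3], [4, 5, 6]]), 3))
```

Both indices range from 0 to 1, which is what makes them convenient companions to the χ² and ANOVA tests, whose statistics grow with sample size.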

Internal consistency and factor structure

Internal consistency of the PCES was evaluated using Cronbach's alpha (α) and McDonald's omega (ω) coefficients. Using both coefficients allowed their estimates to be compared, reducing the risk of bias in the results. To assess factor structure, Confirmatory Factor Analysis (CFA) was conducted to determine whether the original five factors of the PCES were retained (Watt et al., 2019). The Diagonal Weighted Least Squares (DWLS) estimator with robust error calculation was employed (Gaderman et al., 2012; Spritzer et al., 2022). Good model fit criteria included: Standardized Root Mean Square Residual (SRMR) less than .05; Tucker-Lewis Index (TLI) greater than .95; Root Mean Square Error of Approximation (RMSEA) less than .08; Comparative Fit Index (CFI) greater than .95; and a Chi-squared/degrees of freedom ratio (χ²/df) less than 3. These criteria were selected based on established guidelines (Cheung & Rensvold, 2002). To achieve the best-fit factor structure, all Modification Indices (MI) exceeding 10 were analyzed, with consideration given solely to item covariances within the same dimension (Andrade et al., 2023a; Andrade et al., 2023b).
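Two elements of this paragraph lend themselves to a short worked sketch: the computation of Cronbach's alpha from item and total-score variances, and the fit thresholds listed above. The code below is illustrative only; the function names, the tiny response matrix, and the fit values are hypothetical, and the sketch does not reproduce the DWLS estimation or the software used in the study.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha from a list of respondents, each a list of k item ratings.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(item_scores[0])
    item_vars = [variance(column) for column in zip(*item_scores)]
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def check_fit(srmr, tli, rmsea, cfi, chi2, df):
    """Compare fit indices against the thresholds stated in the text."""
    return {
        "SRMR < .05": srmr < 0.05,
        "TLI > .95": tli > 0.95,
        "RMSEA < .08": rmsea < 0.08,
        "CFI > .95": cfi > 0.95,
        "chi2/df < 3": chi2 / df < 3,
    }


# Hypothetical responses from four people to three items rated 1-4.
responses = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 4, 4]]
print(round(cronbach_alpha(responses), 3))

# Hypothetical fit indices, not values from Table 2.
print(check_fit(srmr=0.04, tli=0.97, rmsea=0.05, cfi=0.97, chi2=850.0, df=420))
```

McDonald's omega is not shown because it requires the factor loadings from a fitted model rather than raw variances alone.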

Following the establishment of the optimal factor structure (Model 3), a Multigroup Confirmatory Factor Analysis (MGCFA) was executed to assess measurement invariance across gender (male, n = 450; female, n = 1,557), participant profile (psychology students, n = 132; psychologists, n = 1,875), and age. Participants' ages were categorized as per guidelines from the Brazilian Institute of Geography and Statistics (Instituto Brasileiro de Geografia e Estatística, 2020): (i) young adults (18 to 29 years, n = 262); (ii) adults (30 to 59 years, n = 1,484); and (iii) elderly (60 years or older, n = 261). Measurement invariance was considered present if differences in RMSEA and CFI values (ΔRMSEA and ΔCFI) were less than .02 and .01, respectively (Cheung & Rensvold, 2002).
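The invariance decision rule stated above can be expressed as a small check on the change in fit between a less constrained and a more constrained model. The sketch below is a hypothetical illustration: the function name, the dictionary keys, and the fit values are assumptions made for the example and are not taken from Table 2.

```python
def is_invariant(baseline, constrained, max_delta_rmsea=0.02, max_delta_cfi=0.01):
    """Return True when the change in RMSEA and CFI stays below both cutoffs.

    `baseline` and `constrained` are dicts with "rmsea" and "cfi" keys
    (hypothetical structure chosen for this example).
    """
    delta_rmsea = abs(constrained["rmsea"] - baseline["rmsea"])
    delta_cfi = abs(constrained["cfi"] - baseline["cfi"])
    return delta_rmsea < max_delta_rmsea and delta_cfi < max_delta_cfi


# Hypothetical fit values for two nested multigroup models.
configural = {"rmsea": 0.040, "cfi": 0.980}
metric = {"rmsea": 0.045, "cfi": 0.975}
print(is_invariant(configural, metric))  # True
```

The same comparison would be repeated at each successive level of constraint and for each grouping variable (gender, profile, and age).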

Network Analysis (NA) was also conducted to estimate the clustering of PCES items (nodes) through partial correlations. NA enabled the identification of item grouping patterns and comparison with data derived from CFA and MGCFA. For the 31 PCES items, NA provided four centrality indices: how often an item lies on the shortest path between other items (betweenness centrality), the strength of an item's connections with other items (degree centrality), the average distance between an item and the remaining nodes (closeness centrality), and the items most influential in the network once the sign of each connection is taken into account (expected influence) (Epskamp et al., 2012). LASSO (Least Absolute Shrinkage and Selection Operator) regularization was used to construct the graphs (Figures 1A and 1B). This approach mitigates overfitting by shrinking low-magnitude partial correlations to zero (De Oliveira Pinheiro et al., 2022). In the graphical representation, edges are depicted in green (positive correlation) or red (negative correlation), with line thickness indicating the strength of correlations between items. Item positioning within the graph's center is determined by the intensity and quantity of associations with other PCES items.
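To make the network quantities more concrete, the sketch below computes a partial correlation for the simplest case of three variables and then two centrality indices from a matrix of edge weights. It is a simplified illustration under stated assumptions: in the full analysis each partial correlation is conditioned on all remaining items and LASSO regularization is applied, neither of which is reproduced here. The function names and the small weight matrix are hypothetical.

```python
import math


def partial_correlation(r_xy, r_xz, r_yz):
    """Correlation between x and y after controlling for a single variable z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def strength(weights):
    """Sum of absolute edge weights attached to each node."""
    return [
        sum(abs(w) for j, w in enumerate(row) if j != i)
        for i, row in enumerate(weights)
    ]


def expected_influence(weights):
    """Sum of signed edge weights attached to each node."""
    return [
        sum(w for j, w in enumerate(row) if j != i)
        for i, row in enumerate(weights)
    ]


# Hypothetical symmetric edge-weight matrix for three items.
edges = [
    [0.0, 0.3, -0.2],
    [0.3, 0.0, 0.1],
    [-0.2, 0.1, 0.0],
]
print(round(partial_correlation(0.5, 0.5, 0.5), 3))
print([round(s, 2) for s in strength(edges)])
print([round(e, 2) for e in expected_influence(edges)])
```

Strength and expected influence differ only when negative edges are present, which is why both can be informative in a network that mixes positive and negative associations.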

Convergent validity

Convergent validity was assessed through Spearman correlation analysis, examining the total PCES score in relation to the GPSS and to participants' subjective perception of their confidence in handling various specific demands typical of psychologists (as described in the Instruments section).

Results

Sociodemographic data

As shown in Table 1, significant differences between students and psychology professionals were identified in the PCES total score and in four dimensions, the exception being the assessment and measurement dimension. Gender, however, did not yield significant differences in the total PCES score or in any of its dimensions.

Factor structure and internal consistency

Regarding the factor structure of the PCES (Table 2), the initial model indicated adequate model fit based on the considered fit indices, confirming the instrument's original structure (Watt et al., 2019). Nevertheless, the factor structure was improved by incorporating modification indices aligned with the scale structure. Consequently, new modification index results were integrated into the second model, culminating in the final structure (Model 3), which exhibited superior fit indices compared to the previous models. Additionally, the χ²/df ratio decreased, further enhancing the overall fit. The Multigroup Confirmatory Factor Analysis (MGCFA) also indicated favorable model fits when comparing the factor structure of the PCES across gender, age groups, and professional profiles. Consequently, the identified levels of invariance implied that the instrument is suitable for use in various populations.

Table 2: Confirmatory factor analysis and multigroup confirmatory factor analysis comparing gender, age (young people, adults, elderly) and profile (psychology student and psychologist)

Note: CFA: Confirmatory Factor Analysis; RMSEA: Root-mean-square error of approximation; TLI: Tucker-Lewis Index; SRMR: Standardized root-mean-square residual; CFI: Comparative Fit Index; MGCFA: Multigroup Confirmatory Factor Analysis.

Table 3 presents the factor loadings of items categorized by their respective factors and the reliability indices of the PCES scale. In general, the data indicated a high level of instrument reliability, as determined through Cronbach's α (α = .951) and McDonald's ω (ω = .952) analyses. Notably, the intervention factor exhibited the highest reliability indices, while the research factor displayed the lowest indices.

Network Analysis

Figure 1 displays Gaussian graphical models for the five factors of PCES among psychology students (Figure 1A) and psychologists (Figure 1B). No significant differences were detected in the network structures between these two participant groups, as the distribution of items appeared similar. Notably, the legal matters dimension exhibited robust correlations among item pairs in both groups. Moreover, among psychologists, a strong correlation was observed between items 28 and 29. Additionally, item 17 displayed the highest centrality among students, whereas among psychologists, item 8 held the highest centrality (Figure 1C).

Figure 1: Gaussian graphical model based on network analysis of the 31 items of the PCES for students (1A) and psychologists (1B). The four centrality measures of the items are described in Figure 1C.

Convergent validity

Regarding the correlation levels with the PCES instrument (Table 4), the total instrument score exhibited positive correlations with all the presented variables. Notably, the intervention factor displayed the highest correlation strength among all the scale factors. A weak correlation was observed between the General Perceived Self-Efficacy Scale (GPSS) and the total PCES score. Furthermore, the total PCES score exhibited moderate correlations with certain therapist skills, particularly in face-to-face care situations. When assessing correlation indices between factors, the intervention factor demonstrated the highest frequency of moderate correlations with other evaluated items.

Discussion

The primary objective of this study was to translate, adapt, and investigate the psychometric validity of PCES in Brazil among both psychology students and psychologists. This study marks the first instance in the literature where this instrument has been adapted beyond its country of origin (Watt et al., 2019). Regarding sociodemographic characteristics, the majority of the sample comprised women. However, no significant gender-based differences were identified in PCES scores. A meta-analysis encompassing 247 studies (N= 68,429) evaluated the gender gap in academic self-efficacy (SE). The findings revealed that women exhibited greater SE in language-related areas than men, particularly in analytical data (Huang et al., 2013). The analysis also highlighted that gender disparities in academic SE varied with age, with the most significant effect observed among participants over 23 years of age.

In the present study, the sample primarily consisted of psychologists who had already graduated, with 1,536 reporting some form of continuing education, such as specialization, master's, or doctoral degrees. A study published by the São Paulo Regional Council of Psychology (Conselho Regional de Psicologia de São Paulo, 2004) revealed that 49 % of psychologists reported pursuing specialization as part of their continuing education, focusing on clinical, organizational, master's, or doctoral specializations. Lisboa and Barbosa (2009) noted that the increasing demand for postgraduate courses stemmed from deficiencies in undergraduate training. Considering SE as a complex cognitive process influenced by personal performance experiences (Bandura, 2001), it is plausible that postgraduate programs enhance professionals' knowledge and contribute to their SE perception.

The key psychometric results indicated the semantic, idiomatic, and conceptual equivalence of the Brazilian-adapted PCES in comparison to its original version. CFA indicated a satisfactory model fit, particularly after making adjustments based on modification indices, corroborating the original five-factor structure. Multigroup Confirmatory Factor Analysis (MGCFA) further affirmed this adequacy across gender, professional status (student or professional), and participant age. The internal consistencies in this study (α = .951; ω = .952) exceeded that of the original version (α = .88; Watt et al., 2019). Notably, the uneven distribution of items across factors posed a limitation, affecting Cronbach's alpha, while McDonald's omega, based on factor loadings, provided a more stable estimate of internal consistency (Peixoto & Ferreira-Rodrigues, 2019). From a practical standpoint, daily interventions, which constitute a significant aspect of psychology practice, may contribute to the high reliability observed in the intervention dimension (Kaas, 2018; Mullen & Lambie, 2016).

NA revealed certain similarities with the original instrument. In the original version (Watt et al., 2019), the intervention dimension exhibited stronger associations with the ethics dimension, a logical alignment given the interplay between ethics and intervention in psychologists' daily routines. Conversely, the legal matters dimension displayed weaker associations, consistent with the original findings (Watt et al., 2019), which is reflected in Figure 1. NA also indicated that complex issues, such as Brazilian legislation and strategies for managing anger, violence, self-mutilation, suicide, and substance abuse, require greater professional experience and tend to be interconnected. Moreover, feedback regarding treatment adequacy, as per client responses (IT-8), demands more seasoned professionals and consequently correlates with higher SE among participants. Importantly, success in a task hinges not only on possessing requisite skills but also on event control, where SE beliefs influence motivation, goal setting, effort application, and persistence in the face of challenges and setbacks (Bandura, 1997; Koehler & Mata, 2021). Previous research has shown that workers' SE predicts their work involvement and performance (Consiglio et al., 2016; Tian et al., 2019).

This study has some limitations worth noting. First, the evaluation of the PCES was conducted on a non-probabilistic sample, which might influence the generalizability of the findings to the broader population of psychology students and psychologists. Second, the sample had fewer psychology students compared to psychologists, which might have affected the instrument's validity for the student population. However, on the positive side, our study boasts a significantly larger sample size compared to most psychometric validation studies. Additionally, we employed sophisticated statistical analyses, such as MGCFA and NA, that provided deeper insights into the invariance and centrality of the instrument.

In summary, the findings suggest that PCES is a reliable and easily applicable instrument for assessing SE among psychology students and psychologists in Brazil. In future studies, the scale may be employed to investigate the SE of this population within various professional contexts, contributing to the enhancement of education and training programs. Subsequent psychometric studies could further expand on PCES' properties, including accuracy validity, criterion validity, and predictive validity, utilizing specific methodological designs.